What is Low Latency and Its Importance?

Imagine you and your neighbors are watching the T20 World Cup, and your favorite team is one boundary away from winning the cup. You are waiting for the broadcast to return after the usual commercial, but before it does, your neighbors start screaming about the victory! Gone. You missed the moment in real time. Why? Because your stream had a latency problem, or what people usually call 'lag'.

One common cause of lag is a large volume of data to process. According to Forbes, data capacity rose 5000% to 59 trillion GB in the last decade. When the volume is this large, you need tools that speed up transmission; without them, lag follows.

So, in the post below, let us briefly discuss how this real-time pet peeve works, how it can be corrected, and the best tools to achieve low latency.


What is Low Latency?

Low latency describes a network that can handle a high volume of data transmissions with minimal delay or lag. It is widely used for tasks and projects that need fast access to constantly changing information.

To put it simply, latency is the time delay between when a video is captured and when it is displayed on the user's screen. If the delay is short, the stream has low latency; if the delay is long, it has high latency.
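To make this definition concrete, here is a minimal Python sketch that computes each frame's latency as its display time minus its capture time. It assumes both timestamps are in milliseconds and come from one synchronized clock; the function name and the sample timestamps are hypothetical, for illustration only.

```python
from statistics import mean


def frame_latencies_ms(capture_ms: list[int], display_ms: list[int]) -> list[int]:
    """Latency of each frame: when it appeared on screen minus when it was captured."""
    if len(capture_ms) != len(display_ms):
        raise ValueError("every captured frame needs a matching display timestamp")
    return [shown - captured for captured, shown in zip(capture_ms, display_ms)]


# Hypothetical timestamps (ms) for three frames, read from one shared clock.
captured = [0, 33, 67]
displayed = [180, 215, 247]

per_frame = frame_latencies_ms(captured, displayed)
print(per_frame)                  # [180, 182, 180]
print(round(mean(per_frame), 1))  # 180.7
```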

What is Ultra Low Latency?

Ultra-low latency describes a computer network optimized to process a large number of data packets with extremely low delay. It is designed to access and respond to rapidly changing data in real time.

How Does Latency Work?

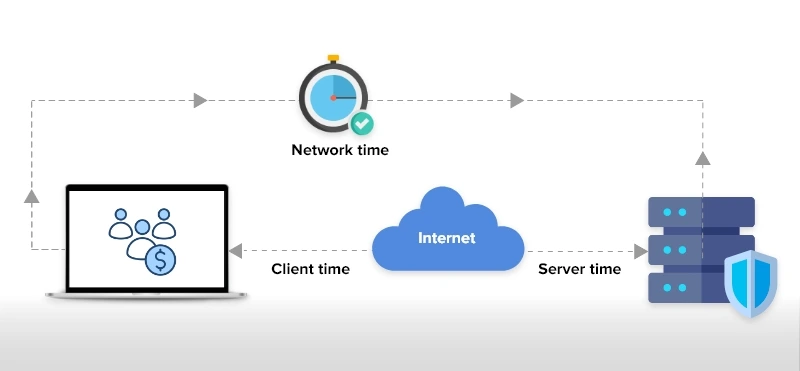

The diagram above gives a simple picture of how latency works. When a user starts a request, data packets travel over different communication channels across the internet to the destination, and a response is sent back. The time this full journey takes is called the Round Trip Time (RTT), and for real-time use the packets should reach the end user within milliseconds.
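The sketch below shows one way to measure RTT, assuming plain UDP packets and a hypothetical echo server that we start ourselves on the local machine (127.0.0.1). Because the packets never leave the computer, the numbers will be far smaller than real internet round trips; the point is the method: timestamp the send, wait for the echo, and subtract.

```python
import socket
import threading
import time


def run_echo_server(sock: socket.socket, count: int) -> None:
    """Send each of the next `count` datagrams straight back to its sender."""
    for _ in range(count):
        data, addr = sock.recvfrom(1024)
        sock.sendto(data, addr)


def measure_rtt_ms(server_addr: tuple[str, int], samples: int = 5) -> list[float]:
    """Time how long each small UDP packet takes to go out and come back."""
    rtts = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        client.settimeout(1.0)  # give up if a reply takes longer than 1 s
        for seq in range(samples):
            payload = seq.to_bytes(4, "big")  # sequence number as the packet body
            start = time.perf_counter()
            client.sendto(payload, server_addr)
            reply, _ = client.recvfrom(1024)
            elapsed_ms = (time.perf_counter() - start) * 1000
            if reply != payload:
                raise RuntimeError("received an unexpected reply")
            rtts.append(elapsed_ms)
    return rtts


# Throwaway echo server on the loopback interface; port 0 lets the OS pick a free port.
server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
server.bind(("127.0.0.1", 0))
SAMPLES = 5
echo_thread = threading.Thread(target=run_echo_server, args=(server, SAMPLES), daemon=True)
echo_thread.start()

rtts = measure_rtt_ms(server.getsockname(), SAMPLES)
echo_thread.join()
server.close()
print(f"min {min(rtts):.3f} ms, max {max(rtts):.3f} ms over {len(rtts)} round trips")
```

Pointing `measure_rtt_ms` at a real remote echo service would give RTTs that include actual network distance and routing.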

There are 4 types of latency levels:

- Reduced Latency: Between 5 and 18 seconds. Applies to live streaming of news and sports.

- Usual Latency: Between 18 and 30 seconds. Applies to non-interactive broadcasts such as radio.

- Low Latency: Less than a second. Applies to all interactive use cases.

- Ultra-low Latency: Under 300 milliseconds. This is the most sought-after level for audio calls, video calls, and video conferencing solutions.
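A minimal sketch of how you might label a measured latency with the tiers above. The boundary handling is an assumption: the list does not say whether its edges are inclusive, and it leaves 1 to 5 seconds and anything above 30 seconds uncovered, so this hypothetical function returns "unclassified" there.

```python
def latency_tier(latency_ms: float) -> str:
    """Map an end-to-end latency in milliseconds to one of the four listed tiers."""
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms < 300:
        return "ultra-low"
    if latency_ms < 1_000:
        return "low"
    if 5_000 <= latency_ms < 18_000:
        return "reduced"
    if 18_000 <= latency_ms <= 30_000:
        return "usual"
    # 1 to 5 s and anything above 30 s fall outside the four tiers in the list
    return "unclassified"


for ms in (120, 650, 2_500, 9_000, 25_000):
    print(ms, latency_tier(ms))
# 120 ultra-low
# 650 low
# 2500 unclassified
# 9000 reduced
# 25000 usual
```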

Importance of Low Latency

Not every use case needs the lowest possible latency, but if your business relies on real-time communication or live streaming, you must make sure your data streams fall into the ultra-low latency category:

- A slight delay in live video calls can hamper business deals and closures.

- Lag can disrupt your colleagues' productivity during project discussions.

- Patients can feel exhausted if they experience breaks or lags during a virtual consultation, since telehealth is meant to offer them ease and comfort.

So, how can you keep your latency levels in check? Let us find out next.

What are the Factors Affecting Latency?

Streaming latency is affected by several factors, and to achieve low latency for your video calls and data transfers, you must overcome these limitations:

- Bandwidth: Higher bandwidth lets more data pass through the connection at once, so each transmission finishes sooner and there is less congestion.

- Encoder: The encoder must be optimized to process each frame and send it to the receiver without delay.

- Optical Fiber: The connection type affects data transmission. We suggest using optical fiber instead of wireless internet.

- Long Distance: Try to stay as close as possible to your ISP, satellites, and internet hubs, because longer distances increase delay.

- File Size: Larger files take longer to transmit over the internet, which increases streaming latency.
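Several of these factors can be combined into a rough, back-of-the-envelope delay estimate: transmission time (payload size divided by bandwidth) plus propagation time (distance divided by signal speed) plus a fixed processing allowance for things like encoding. The sketch assumes light in optical fiber covers roughly 200 km per millisecond and ignores queuing, retransmissions, and protocol overhead; the function and its `processing_ms` parameter (a stand-in for encoder delay) are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # approximate signal speed in optical fiber: ~200 km per millisecond


def one_way_delay_ms(payload_bytes: int, bandwidth_mbps: float, distance_km: float,
                     processing_ms: float = 0.0) -> float:
    """Rough one-way delay = transmission + propagation + fixed processing time."""
    transmission_ms = (payload_bytes * 8) / (bandwidth_mbps * 1_000_000) * 1000
    propagation_ms = distance_km / FIBER_KM_PER_MS
    return transmission_ms + propagation_ms + processing_ms


# Same 1.25 MB payload over 2,000 km with 20 ms of encoding, at two bandwidths.
print(one_way_delay_ms(1_250_000, 10, 2_000, processing_ms=20))
print(one_way_delay_ms(1_250_000, 100, 2_000, processing_ms=20))
```

Raising bandwidth from 10 to 100 Mbps cuts this estimate from 1030 ms to 130 ms, while the 2,000 km distance contributes only 10 ms. For small packets such as individual audio frames, the balance flips and distance dominates.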

These are some of the factors that keep latency high. Fortunately, there are also

Ways to Reduce Latency

Using a strong internet connection, the right infrastructure, a short physical distance to servers, and a good encoder can reduce the latency of your live streams.

In addition, these steps can help bring high latency down:

- Using smart network interface cards and programmable network switches.

- Identifying and monitoring the IT infrastructure.

- Analyzing networking issues and investing in cloud infrastructure.

- Using streaming protocols like WebRTC, RTMP, and FTL to deliver low-latency video streams.

- Using voice and video APIs, since their providers design them for minimal latency. For example, you can integrate an API provider like MirrorFly into your chat platform for seamless, smooth audio and video transmission.

- Working with an experienced web developer or tech-stack professional who can apply code minification to reduce loading time.

- Evaluating which network functions can be offloaded to a Field Programmable Gate Array (FPGA).
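For the monitoring step, a common practice is to track latency percentiles rather than averages, because a few slow requests can hide behind a healthy mean. This sketch uses the nearest-rank percentile method and checks the 95th percentile against a 100 ms budget, the sub-100 ms target this post recommends for real-time apps. The function names and sample data are hypothetical.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample covering pct% of all samples."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def latency_report(samples_ms: list[float], budget_ms: float = 100.0) -> dict:
    """Summarize latency samples and flag whether the 95th percentile meets the budget."""
    p95 = percentile(samples_ms, 95)
    return {
        "p50": percentile(samples_ms, 50),
        "p95": p95,
        "p99": percentile(samples_ms, 99),
        "within_budget": p95 <= budget_ms,
    }


# Hypothetical RTT samples (ms) with a single slow spike.
samples = [42, 45, 47, 51, 48, 44, 46, 250, 49, 43]
print(latency_report(samples))
# {'p50': 46, 'p95': 250, 'p99': 250, 'within_budget': False}
```

In this sample the mean is 66.5 ms, comfortably under budget, yet the 95th percentile is 250 ms because of one spike, which is exactly the kind of lag users notice.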

So far, we have seen what can be done to reduce the latency of live streams. Next, let us address the elephant in the room: how low latency pays off in different use cases.

Benefits of Low Latency for Different Use Cases

In real-time applications, high latency can ruin engagement on a platform. That is why low latency is essential for any such application that wants to retain customers and improve overall engagement.

Here we will see the situations where low latency is called for:

- Online Video Games: Gamers need to chat with their peers instantly, and the games they play on any device must reflect the action in real time without lag. If latency is high, gamers will not stick with your product.

- E-learning: Imagine a lag during a live classroom session. Students miss parts of the lesson, and your app fails to support their education.

- Healthcare Apps: Consultations have gone virtual, and patients now connect with doctors over video calls. If they encounter lag in the video, they are likely to switch back to in-person visits.

- Enterprise Chats: As remote work became widespread, real-time chat apps came into great demand, and voice and video calls helped colleagues meet their project deadlines. A delay caused by a network issue only adds to their workload.

- High-Frequency Trading: Low-latency trading is now a regular practice in financial markets. Here, low latency is the competitive edge: it lets a firm receive market information and act on it faster than other traders.

Wrapping Up

We hope this post helped you learn what you need to know about low latency. For a lag-free experience, it is important to choose SDKs that deliver latencies below 100 ms.

MirrorFly provides the fastest, lag-free, and most affordable in-app communication SDKs for Android, iOS, and web apps. Moreover, we offer both cloud and self-hosted solutions, so you can flexibly choose how to customize and deploy your apps. Want to know more about our 100% customizable SDKs? Contact our experts today!


Frequently Asked Questions (FAQ)

Why is low latency important?

Low latency is essential for delivering smooth, fast, and responsive user experiences, allowing participants to communicate in real time without delays. If high latency issues persist in an application, users can get frustrated and switch to another application permanently.

Is low latency better?

Yes. Low latency is generally considered best, because users can communicate with each other or with the application without delays or lags. A latency of 40 to 60 ms is highly acceptable, as it improves the overall user experience and keeps responses fast and seamless.

What is low latency video?

Low latency video is video content with very little delay between when an image is captured by the camera and when it is displayed to viewers. In general, low latency video has a latency of less than 1 second, and protocols such as WebRTC and DASH are often used to achieve it.

How can you reduce video latency and improve video call quality?

Some of the best ways are increasing bandwidth, using high-quality hardware such as a high-resolution camera, using an efficient video encoder and decoder, deploying a CDN to reduce the distance between server and viewer, and using adaptive streaming protocols like HLS, DASH, and WebRTC.
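Adaptive streaming protocols such as HLS and DASH offer the same video at several bitrates and let the player switch between them. The sketch below shows a simple throughput-based rule for that choice: pick the highest bitrate that fits within a safety fraction of the measured throughput, so the player is less likely to stall and rebuffer. The bitrate ladder, the function name, and the 0.8 safety factor are hypothetical; production players generally use more elaborate logic that also considers buffer levels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


# Hypothetical bitrate ladder; a real one comes from your encoder or packager settings.
LADDER = [
    Rendition("240p", 400),
    Rendition("480p", 1_200),
    Rendition("720p", 2_800),
    Rendition("1080p", 5_000),
]


def pick_rendition(measured_kbps: float, ladder: list[Rendition] = LADDER,
                   safety: float = 0.8) -> Rendition:
    """Highest-bitrate rendition that fits in `safety` x measured throughput."""
    budget = measured_kbps * safety  # leave headroom for throughput dips
    fitting = [r for r in ladder if r.bitrate_kbps <= budget]
    if not fitting:
        # Even the lowest rung exceeds the budget: use it rather than stop playback.
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(fitting, key=lambda r: r.bitrate_kbps)


for kbps in (300, 1_000, 4_000, 10_000):
    print(kbps, pick_rendition(kbps).name)
# 300 240p
# 1000 240p
# 4000 720p
# 10000 1080p
```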

What is a good latency?

Good latency means the shortest possible time for data to travel from source to destination. The lower the latency, the better the performance, and latency below 100 ms is considered great for real-time applications.

How can you improve video latency?

You can take the following steps to improve video latency:

- Increasing the bandwidth and internet speed

- Moving closer to a server

- Restarting or replacing your router

- Using a CDN to distribute traffic

- Using a wired connection instead of Wi-Fi, if possible

- Improving the encoding process with an efficient codec
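Moving closer to a server and using a CDN both come down to serving each viewer from the lowest-latency location. Assuming you already have a few RTT samples per edge server (from ping or your own client probes), a simple way to choose is the edge with the lowest median RTT; the median keeps one unlucky spike from ruling out an otherwise fast server. The edge names, numbers, and function name are hypothetical.

```python
from statistics import median


def pick_closest_edge(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the edge whose median RTT is lowest, skipping edges with no samples."""
    medians = {edge: median(s) for edge, s in rtt_samples_ms.items() if s}
    if not medians:
        raise ValueError("no RTT samples")
    return min(medians, key=medians.get)


# Hypothetical probe results in milliseconds.
probes = {
    "edge-frankfurt": [38.0, 41.5, 39.2],
    "edge-mumbai": [12.1, 95.0, 11.8],  # one spike, but usually the fastest
    "edge-singapore": [64.3, 66.0, 63.9],
}
print(pick_closest_edge(probes))  # edge-mumbai
```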
