How To Build A Custom AI Chatbot: Complete Step-by-Step Guide (2026)

The AI chatbot market is valued at USD 9.5 billion in 2026 and is growing at 26.9% annually. A custom AI chatbot’s architecture typically includes a Retrieval-Augmented Generation (RAG) system, which lets Large Language Models (LLMs) use external, up-to-date data stored in vector databases, reducing hallucinations.

In this blog, we discuss this architecture and show you how to build a custom AI chatbot in 7 steps. Before getting started, let’s look at a few key differences between a ‘custom AI chatbot’ and a ‘rule-based chatbot’.

What Makes A Chatbot “Custom”?

Custom chatbots are built to meet a brand’s specific requirements and can integrate with CRM, ERP, product databases, and more. They respond accurately within the brand’s linguistic context, and you have complete control over their logic, data handling, error recovery, and escalation flows.

In contrast, no-code and rule-based chatbots depend on templates and predefined scripts, which limits dynamic responses and contextual understanding. Because they only recognize specific keywords, rule-based chatbots fail to generate appropriate answers in multi-turn conversations or when faced with unexpected queries or slang.
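To make this limitation concrete, here is a minimal keyword-matching bot in Python. The rules and replies are hypothetical. The point is that any phrasing outside the hard-coded keywords falls through to the fallback.

```python
import re

# Hypothetical rule table: keyword -> canned reply.
RULES = {
    "refund": "You can request a refund from the Orders page.",
    "shipping": "Standard shipping takes 3-5 business days.",
}
FALLBACK = "Sorry, I didn't understand that."


def rule_based_reply(message: str) -> str:
    """Return the canned reply for the first known keyword in the message."""
    words = re.findall(r"[a-z]+", message.lower())
    for keyword, reply in RULES.items():
        if keyword in words:
            return reply
    return FALLBACK


print(rule_based_reply("How do I get a refund?"))     # keyword found
print(rule_based_reply("can i get my money back??"))  # same need, no keyword: fallback
```

The second message asks for the same thing as the first. Because it does not contain the word "refund", the bot cannot answer it.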

Architecture Difference: Custom Chatbots vs Rule-Based Bots

| Aspect | Custom Chatbots | Rule-Based Chatbots |
|---|---|---|
| 1. Core module | Natural Language Understanding, Dialogue Manager, Natural Language Generation | Rules Engine |
| 2. Knowledge Storage | Vector Database | No vector storage, knowledge is hard-coded |
| 3. Learning | Continuous learning from data | Manual updates only |

What Are The Benefits Of Building A Custom AI Chatbot?

Top 7 Benefits Of Building A Custom AI Chatbot

- Domain Knowledge Accuracy: AI chatbots grounded in a company’s proprietary data deliver responses aligned with the brand’s specific industry, reducing the chance of misinformation.

- Sentiment Analysis: Detecting user sentiment helps de-escalate negative situations and deliver empathetic, context-aware responses.

- Automated Chat Support: Instant 24/7 customer assistance eliminates wait times and handles FAQs outside business hours.

- NLU + Personalization: No robotic replies. With Natural Language Understanding, the AI chatbot interprets user intent accurately and engages in highly personalized conversations.

- Privacy & Data Control: When the chatbot runs on your own secure infrastructure, data is not exposed to third parties, which supports compliance with privacy regulations such as GDPR and HIPAA.

- Lead Qualification: A custom AI chatbot filters qualified leads more intelligently through targeted questions and behavior tracking, greatly reducing manual effort.

- Rich Media Support: Plain text replies can limit clarity, so AI chatbots can deliver images, videos, carousels, and documents to enhance user engagement.

What Will Your AI Chatbot’s Strategy Be?

- Who will interact with your AI chatbot?

- What’s your custom AI chatbot’s purpose?

- What personality should your AI chatbot have?

- On which platforms will your audience interact with the AI chatbot?

- What encryption methods will protect your users?

Building a custom AI chatbot isn’t merely a matter of integrating an LLM and going live. Outline a winning strategy and set clear goals first. Without a focus on high-impact pain points in the customer journey, the chatbot is likely to earn a lower customer satisfaction (CSAT) score.

Identify Your Audience: First, define who will interact with your chatbot, whether customers, prospects, or employees. Understanding their intent and pain points shapes how your chatbot’s NLU interprets tone and context.

Set Clear Use Cases: Be specific about the chatbot’s purpose, which can range from customer support and sales assistance to lead capture and internal task automation. Each use case requires different features:

- Customer support: multi-channel support

- Sales assistance: sentiment analysis and CRM integration

- Lead qualification: behavioral analysis and custom prompts

- Internal task automation: integration with internal databases to automate repetitive tasks for teams

Define Persona and Tone: Decide what personality your chatbot should have. A professional tone works for enterprise users, while a friendly tone suits retail audiences. If users expect an emotional connection with your AI assistant, prioritize defining a clear persona.
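In practice, persona and tone usually go into the system prompt that accompanies every LLM request. The sketch below uses a hypothetical `Persona` dataclass and `build_system_prompt` helper. The field names are illustrative and do not belong to any particular framework.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    """Hypothetical persona settings; field names are illustrative."""
    name: str
    tone: str        # e.g. "professional" for enterprise, "friendly" for retail
    audience: str
    escalation: str  # what the bot should do when it cannot help


def build_system_prompt(p: Persona) -> str:
    """Render the persona into a system prompt sent with every LLM request."""
    return (
        f"You are {p.name}, an assistant for {p.audience}. "
        f"Always use a {p.tone} tone. "
        f"If you cannot help, {p.escalation}."
    )


retail = Persona(
    name="Ava",
    tone="friendly",
    audience="retail shoppers",
    escalation="offer to connect the user with a human agent",
)
print(build_system_prompt(retail))
```

Keeping the persona in one structured object makes the enterprise and retail variants easy to compare and version.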

Pick the Right Channels: Think about where your audience will interact with the chatbot, such as your website, mobile app, WhatsApp, or other social platforms. For a seamless, unified experience, omnichannel deployment or multi-channel support is recommended.

Understand Privacy + Compliance: Finally, ensure your AI chatbot uses data encryption and complies with standards such as GDPR and HIPAA.

How Does A Custom AI Chatbot Work?

Stage 1: User Input Processed

Before the LLM receives any query, the chatbot preprocesses the user input to ensure clarity and continuity. Unnecessary characters are removed from the raw input, and spelling errors are corrected. To detect the language and apply an appropriate tokenizer, use langdetect or fastText. Autocorrection tools you can integrate include:

- LanguageTool → corrects grammar

- SymSpell → corrects spelling

- GingerIt → fixes contextual spelling or grammar
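The cleanup and spelling-correction steps can be sketched with the Python standard library alone. This is a stand-in that rests on assumptions: `difflib.get_close_matches` against a tiny hypothetical vocabulary plays the role of SymSpell, and language detection (langdetect or fastText) is omitted.

```python
import difflib
import re
import unicodedata

# Hypothetical domain vocabulary; SymSpell would use a large word-frequency dictionary.
VOCAB = ["refund", "order", "delivery", "cancel", "invoice", "password"]


def clean_text(raw: str) -> str:
    """Normalize Unicode, turn control characters into spaces, collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    text = "".join(" " if unicodedata.category(ch).startswith("C") else ch for ch in text)
    return re.sub(r"\s+", " ", text).strip()


def correct_spelling(text: str, cutoff: float = 0.8) -> str:
    """Replace each word with its closest vocabulary word if the similarity clears the cutoff."""
    corrected = []
    for word in text.split(" "):
        match = difflib.get_close_matches(word.lower(), VOCAB, n=1, cutoff=cutoff)
        corrected.append(match[0] if match else word)
    return " ".join(corrected)


print(correct_spelling(clean_text("  I want a  refnd\tfor my ordr ")))
```

A real deployment would also handle punctuation attached to words and skip correction for names and IDs.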

Next, to understand what the user intends to do, the system performs intent classification and entity recognition:

- spaCy → lightweight NLP library for entity recognition and text classification

- Hugging Face Transformers → library for advanced intent classification
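As a rough illustration of intent classification and entity recognition, the sketch below does two things. It scores a message against example utterances using bag-of-words cosine similarity, and it extracts order IDs with a regular expression. The intents, examples, and `AB123456` order-ID format are hypothetical. In practice, spaCy or a Transformers classifier would replace this toy scorer.

```python
import math
import re
from collections import Counter

# Hypothetical example utterances per intent.
INTENT_EXAMPLES = {
    "track_order": ["where is my order", "track my package", "order status"],
    "request_refund": ["i want a refund", "refund my purchase", "money back please"],
}
ORDER_ID = re.compile(r"\b[A-Z]{2}\d{6}\b")  # assumed order-ID format, e.g. AB123456


def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify(text: str) -> dict:
    """Score each intent by its most similar example; extract order IDs as entities."""
    query = bag_of_words(text)
    scores = {
        intent: max(cosine(query, bag_of_words(example)) for example in examples)
        for intent, examples in INTENT_EXAMPLES.items()
    }
    best = max(scores, key=scores.get)
    return {"intent": best, "score": round(scores[best], 2), "entities": ORDER_ID.findall(text)}


print(classify("Where is my order AB123456?"))
```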

At this point, the chatbot has converted raw text into machine-understandable meaning, and these structured inputs are passed to the context manager.

The context manager maintains conversation history and tracks dialogue flow to prevent redundant responses, so the chat remains coherent across multiple turns.
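A context manager can be as simple as a bounded history plus a redundancy check. In this sketch, the `ContextManager` class, its turn limit, and the exact-match redundancy rule are assumptions chosen for clarity. The role/content message shape mirrors what common chat LLM APIs accept.

```python
from collections import deque


class ContextManager:
    """Bounded conversation history with a simple redundancy check."""

    def __init__(self, max_turns: int = 6):
        # deque drops the oldest turn automatically once max_turns is reached
        self.history = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.history.append({"role": role, "content": text})

    def is_redundant(self, reply: str) -> bool:
        """True if the candidate reply repeats the bot's previous reply verbatim."""
        bot_turns = [t["content"] for t in self.history if t["role"] == "assistant"]
        return bool(bot_turns) and bot_turns[-1] == reply

    def as_messages(self) -> list:
        """History as role/content messages, the shape common chat LLM APIs accept."""
        return list(self.history)


ctx = ContextManager(max_turns=4)
ctx.add("user", "Where is my order?")
ctx.add("assistant", "Could you share your order ID?")
ctx.add("user", "AB123456")
print(ctx.is_redundant("Could you share your order ID?"))  # True: ask something else instead
```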

Stage 2: LLMs Interpret User Input

Using deep neural architectures, the LLM transforms the user’s unstructured natural language into structured entities with contextual meaning. Different LLMs interpret what users mean differently, depending on how they were trained.

- OpenAI’s GPT models are tuned with reinforcement learning from human feedback (RLHF), making them well suited to general-purpose assistants and creative tasks.

- Anthropic’s Claude is trained to follow an explicit set of principles (Constitutional AI), making it suitable for responsible enterprise chatbot applications.

- Meta’s LLaMA is an open-weight model that can be fine-tuned on specialized datasets, making it a fit for custom or industry-specific chatbots.

- For customer support with long multi-turn conversations, Mistral models are a strong option. Mistral 7B, for example, uses sliding-window attention to process long sequences efficiently.

Stage 3: Memory & Personalization Module

To facilitate natural interactions, the chatbot stores information about the user’s history and the current session. A short-term memory module retains session-specific data, while a long-term memory module stores historical user interactions.

- Short-Term Memory Tools: Redis, SQLite, Chroma

- Long-Term Memory Tools: PostgreSQL, MongoDB, Firebase, or a Vector Database (to store embeddings)

The memory retrieval mechanism continuously updates and references stored data, passing relevant context back into the LLM. This creates a sense of continuity, so the chatbot “remembers” the user’s goals, tone, and previous conversations.
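The split between short-term and long-term memory might look like the sketch below. Two stand-ins are assumed: an in-process dictionary with a time-to-live replaces Redis, and Python’s built-in `sqlite3` module provides the long-term store. `MemoryStore` and its methods are hypothetical names.

```python
import sqlite3
import time


class MemoryStore:
    """Short-term session memory (dict with TTL, standing in for Redis) plus
    long-term interaction history in SQLite."""

    def __init__(self, db_path: str = ":memory:", session_ttl_s: float = 1800.0):
        self.sessions = {}  # session_id -> (last_seen, [turns])
        self.ttl = session_ttl_s
        self.db = sqlite3.connect(db_path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS interactions (user_id TEXT, ts REAL, text TEXT)"
        )

    def remember(self, user_id: str, session_id: str, text: str) -> None:
        now = time.time()
        _, turns = self.sessions.get(session_id, (now, []))
        turns.append(text)
        self.sessions[session_id] = (now, turns)
        self.db.execute("INSERT INTO interactions VALUES (?, ?, ?)", (user_id, now, text))
        self.db.commit()

    def session_context(self, session_id: str) -> list:
        """Short-term: turns from the current session, discarded after the TTL expires."""
        last_seen, turns = self.sessions.get(session_id, (0.0, []))
        if time.time() - last_seen > self.ttl:
            self.sessions.pop(session_id, None)
            return []
        return list(turns)

    def user_history(self, user_id: str, limit: int = 5) -> list:
        """Long-term: the user's most recent interactions across all sessions."""
        rows = self.db.execute(
            "SELECT text FROM interactions WHERE user_id = ? ORDER BY rowid DESC LIMIT ?",
            (user_id, limit),
        ).fetchall()
        return [row[0] for row in rows]


mem = MemoryStore()
mem.remember("u1", "s1", "I prefer email updates")
mem.remember("u1", "s2", "Where is my order?")
print(mem.session_context("s2"))  # ['Where is my order?']
print(mem.user_history("u1"))     # ['Where is my order?', 'I prefer email updates']
```

Only the long-term store survives across sessions. This is what lets a new session recall the user’s stated preference.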

Once the context is reconstructed, the personalization module adapts responses to user behavior using contextual prompts and an adaptive response generator, adjusting tone and content to match user expectations.

Stage 4: Knowledge Fetching Using RAG + Vector Database

At this stage, the custom chatbot fetches real, verified information from a knowledge base using RAG. Without this retrieval step, an LLM is prone to hallucinating when asked about something outside its training data.

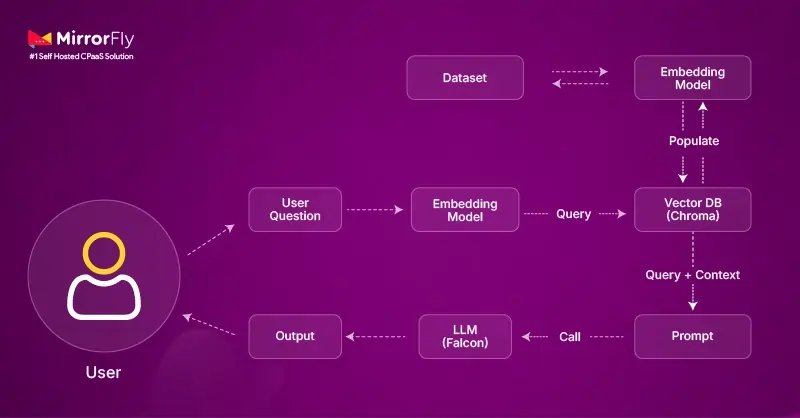

The knowledge base stores a company’s internal data, such as policy documents, product manuals, structured proprietary databases, and FAQs, loaded through data ingestion. The flowchart illustrates the whole RAG pipeline.

- Data Ingestion: A framework such as LlamaIndex ingests data sources such as PDFs or databases. The collected data is split into chunks, and an embedding model converts each chunk into a numerical vector (an embedding) that captures the text’s semantic meaning.

- Semantic Retrieval: The embeddings are stored in the knowledge base’s vector database. The chatbot also converts the user query into a vector and retrieves the semantically most relevant chunks from the database.

- Context Ranking: Results are ranked by relevance so the most contextually accurate information is prioritized for the LLM. A re-ranking algorithm or similarity scoring refines the retrieved chunks before they are sent to the LLM.

Note: Developers can use LlamaIndex for data ingestion and chunking of large documents, and a cloud-based vector database such as Pinecone for advanced semantic search. Together, the vector database and LLM ground the chatbot’s answers and reduce hallucinations.
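The retrieval flow can be sketched end to end in plain Python. This toy rests on clear assumptions. `embed` builds a normalized bag-of-words vector instead of calling a real embedding model. `InMemoryVectorStore` stands in for LlamaIndex plus a vector database such as Pinecone. Because the vectors are unit length, the dot product equals cosine similarity.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict:
    """Toy stand-in for an embedding model: an L2-normalized bag-of-words vector.
    Real embedding models capture meaning, not just shared words."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}


def similarity(a: dict, b: dict) -> float:
    # Both vectors are unit length, so the dot product is the cosine similarity.
    return sum(v * b.get(token, 0.0) for token, v in a.items())


class InMemoryVectorStore:
    """Stand-in for a vector database: stores (embedding, chunk, metadata) rows."""

    def __init__(self):
        self.rows = []

    def add(self, chunk: str, metadata: dict) -> None:
        self.rows.append((embed(chunk), chunk, metadata))

    def search(self, query: str, top_k: int = 2) -> list:
        q = embed(query)
        scored = [(similarity(q, vec), chunk, meta) for vec, chunk, meta in self.rows]
        scored.sort(key=lambda row: row[0], reverse=True)  # context ranking by relevance
        return scored[:top_k]


def build_prompt(question: str, hits: list) -> str:
    """Ground the LLM: pass only the retrieved chunks, tagged with their sources."""
    context = "\n".join(f"[{meta['source']}] {chunk}" for _, chunk, meta in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = InMemoryVectorStore()
store.add("Refunds are issued within 5 business days of approval.", {"source": "refund-policy.pdf"})
store.add("Standard shipping takes 3 to 7 days.", {"source": "shipping-faq"})
store.add("Passwords can be reset from the account settings page.", {"source": "account-manual"})

question = "How many days until my refund is issued?"
hits = store.search(question)
print(build_prompt(question, hits))
```

A real embedding model would also match paraphrases such as "money back" to the refund chunk, which this word-overlap toy cannot do. A dedicated re-ranker, such as a cross-encoder, would add a second scoring pass over the top hits.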

Tools for Data Ingestion

- LangChain Document Loaders (PDFLoader, CSVLoader, etc.)

- LlamaIndex Data Connectors

- Unstructured.io for cleaning messy files

Cloud-Based Vector Databases:

| Vector Database | Key Strengths | Use Case |
|---|---|---|
| 1. Pinecone | Fully managed, serverless architecture; highly scalable | Enterprise chatbots, SaaS apps |
| 2. Weaviate | Built-in vectorizer, modular schema, GraphQL API | Semantic and hybrid search for RAG chatbots |
| 3. Milvus | Open-source, scalable, supports billions of vectors | Large enterprise knowledge base applications |
| 4. Qdrant | Built with Rust, supports extensive payload filtering | Mid-size chatbot deployments |
| 5. Redis Vector Store | Real-time, in-memory search with low latency | Preferred for chatbots with live or transactional data |
| 6. Elasticsearch + Vector Plugin | Hybrid search combining text and vector search | Enterprise search or chat solutions |

Local Vector Databases:

| Vector Database | Key Strengths | Use Case |
|---|---|---|
| 1. Chroma | Developer-friendly, AI-native design; works well with LangChain | Rapid prototyping or small chatbots |
| 2. FAISS (Meta) | Lightweight, open-source, and handles high-dimensional vector data with remarkable speed | On-device or private chatbots |

Stage 5: Intent Routing With Conversation Manager

After the input is processed and knowledge is retrieved, the conversation manager orchestrates actions based on the user’s intent. This intent routing ties memory, LLM APIs, and document search together into a cohesive pipeline.

For multi-step approvals or advanced reporting, this component handles custom workflows and conditional logic, letting the chatbot automate complex tasks.
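Intent routing is essentially a dispatch table from intents to handlers, with a fallback for low-confidence predictions. The handler names, the `0.5` confidence threshold, and the query dictionary shape (`intent`, `score`, `entities`, `text`) below are hypothetical.

```python
from typing import Callable


# Hypothetical handlers; real ones would call the RAG retriever, the LLM, or business APIs.
def answer_from_docs(query: dict) -> str:
    return f"Searching the knowledge base for: {query['text']}"


def start_refund_workflow(query: dict) -> str:
    # Conditional logic: a multi-step workflow asks for missing data before acting.
    if not query["entities"]:
        return "Which order would you like refunded?"
    return f"Refund request opened for order {query['entities'][0]}."


def escalate_to_human(query: dict) -> str:
    return "Connecting you to a support agent."


ROUTES: dict[str, Callable[[dict], str]] = {
    "faq": answer_from_docs,
    "request_refund": start_refund_workflow,
}


def route(query: dict, min_confidence: float = 0.5) -> str:
    """Dispatch on the classified intent; unknown or low-confidence intents go to a human."""
    handler = ROUTES.get(query["intent"])
    if handler is None or query["score"] < min_confidence:
        return escalate_to_human(query)
    return handler(query)


print(route({"intent": "request_refund", "score": 0.89, "entities": ["AB123456"], "text": "refund AB123456"}))
print(route({"intent": "request_refund", "score": 0.89, "entities": [], "text": "I want a refund"}))
print(route({"intent": "unknown", "score": 0.95, "entities": [], "text": "???"}))
```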

Stage 6: Integration & Execution Layer

The API layer connects the chatbot to CRMs, ERPs, CMSs, ticketing and payment systems, and proprietary databases, and executes the required API calls or code within those systems to perform real operational tasks.

Meanwhile, the user interface displays the chatbot’s responses to the user in real time. For scalable, flexible backend and frontend layers, frameworks such as FastAPI or Flask (backend) and Gradio (frontend) are common choices.

Stage 7: Response Generated

Before the LLM’s draft response reaches the user, the system refines and moderates it, turning raw model output into polished natural language. Moderation tools such as Perspective API score text for toxicity so that offensive or harmful content can be filtered out.

During post-processing, tone and formatting are adapted to the user preferences captured earlier by the personalization module.
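A post-processing step might look like the sketch below. Perspective API itself is not called here. `toxicity_score` is a hypothetical placeholder that uses a naive blocklist, whereas a real integration would use the service’s toxicity score (a value between 0 and 1). The `style` preference key is also an assumption.

```python
import re

# Placeholder for a moderation service such as Perspective API, which returns a
# toxicity score between 0 and 1. This naive blocklist only illustrates the flow.
BLOCKLIST = {"idiot", "stupid"}


def toxicity_score(text: str) -> float:
    words = set(re.findall(r"[a-z]+", text.lower()))
    return 1.0 if words & BLOCKLIST else 0.0


def postprocess(draft: str, prefs: dict, threshold: float = 0.7) -> str:
    """Moderate the LLM draft, then adapt its length to the user's stored preference."""
    if toxicity_score(draft) >= threshold:
        return "Sorry, I can't share that response. Let me connect you with an agent."
    text = draft.strip()
    if prefs.get("style") == "short":
        # Brief answers: keep only the first sentence.
        text = re.split(r"(?<=[.!?])\s+", text, maxsplit=1)[0]
    return text


draft = "Your refund was approved. It will reach your card in 5 business days."
print(postprocess(draft, {"style": "short"}))     # Your refund was approved.
print(postprocess(draft, {"style": "detailed"}))  # full draft
```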

Stage 8: Analytics & Feedback Loop

Finally, the system tracks performance metrics such as latency, uptime, API efficiency, and response accuracy, along with user experience metrics such as engagement rate, resolution success, and conversation depth.

A feedback loop, the model update pipeline, collects user interactions, system performance, and sentiment data to fine-tune both the LLM and the orchestration logic. The refined data is fed back into the memory, knowledge, and model training layers, closing the loop.
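Most of these metrics are simple aggregates over conversation logs. The sketch assumes a hypothetical `Turn` record with latency, resolution status, and optional thumbs-up feedback. It uses Python’s `statistics` module for the median and 95th-percentile latency, and it flags turns that should feed the review or fine-tuning queue.

```python
import statistics
from dataclasses import dataclass
from typing import Optional


@dataclass
class Turn:
    """One logged bot turn; field names are hypothetical."""
    latency_ms: float
    resolved: bool
    thumbs_up: Optional[bool] = None  # explicit user feedback, if any


def summarize(turns: list) -> dict:
    latencies = [t.latency_ms for t in turns]
    rated = [t.thumbs_up for t in turns if t.thumbs_up is not None]
    return {
        "p50_latency_ms": statistics.median(latencies),
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        "p95_latency_ms": statistics.quantiles(latencies, n=20, method="inclusive")[-1],
        "resolution_rate": sum(t.resolved for t in turns) / len(turns),
        "satisfaction": sum(rated) / len(rated) if rated else None,
        # Unresolved or thumbs-down turns are candidates for review and fine-tuning data.
        "needs_review": [i for i, t in enumerate(turns) if not t.resolved or t.thumbs_up is False],
    }


log = [Turn(120, True, True), Turn(340, True), Turn(95, False, False), Turn(210, True, True)]
print(summarize(log))
```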

How to Develop an AI Chatbot from Scratch in 7 Steps

Step 1: Structuring The Knowledge Base

Using an embedding model (for example, one of OpenAI’s embedding models), convert the collected enterprise data chunks into embeddings and store them in a vector database along with metadata such as title, section, and page.

Semantic chunking can be complex and yields variable chunk sizes. Recursive chunking is a simpler alternative. It relies on a hierarchy of separators, splitting on high-level separators first and then moving to increasingly finer ones until every chunk fits the size limit.
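Recursive chunking can be sketched in a few lines. The separator list, the default character limit, and the function name are assumptions, and libraries such as LangChain ship tuned versions of this splitter. In this simplified version, a separator is dropped wherever a chunk boundary falls on it.

```python
SEPARATORS = ("\n\n", "\n", ". ", " ")  # paragraphs, lines, sentences, words


def recursive_chunk(text: str, max_chars: int = 200, separators: tuple = SEPARATORS) -> list:
    """Split on the coarsest separator first; recurse with finer separators
    only for pieces that are still longer than max_chars."""
    text = text.strip()
    if len(text) <= max_chars:
        return [text] if text else []
    if not separators:
        # No separators left: fall back to fixed-size character slices.
        return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]
    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= max_chars:
            current = candidate  # greedily merge neighbouring pieces up to the limit
            continue
        if current:
            chunks.append(current)
        if len(piece) > max_chars:
            chunks.extend(recursive_chunk(piece, max_chars, finer))
            current = ""
        else:
            current = piece
    if current:
        chunks.append(current)
    return [c.strip() for c in chunks if c.strip()]


doc = (
    "Refund policy\n\n"
    "Refunds are issued within 5 business days. Items must be unused. "
    "Contact support for damaged goods.\n\n"
    "Shipping\n\n"
    "Standard shipping takes 3 to 7 days."
)
for chunk in recursive_chunk(doc, max_chars=60):
    print(repr(chunk))
```

The long paragraph is broken at sentence boundaries only because it exceeds the limit. The short "Shipping" section stays together with its heading.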

Note that efficient knowledge structuring strongly affects system performance, especially latency and retrieval speed. These matter most for real-time apps where users expect instant responses.

Step 2: Choosing AI Model + Deployment Method

For hosted APIs, OpenAI’s GPT models (which power ChatGPT) and Google Gemini are recommended for chatbots that prioritize speed and relevance.

Self-hosted LLMs such as LLaMA or Mistral suit industry-specific use cases, giving you full control over customization and data privacy.

If you self-host the model, use Docker to package the chatbot and its dependencies into a single portable container, ensuring consistency across all environments.

Step 3: Design Your Conversational Experience

Map user interactions using tools such as Dialogflow CX, Botpress, or Rasa X. Define the intents, entities, and data inputs the bot needs to collect, and create response variations so replies sound natural.
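Dialogflow CX, Botpress, and Rasa X each have their own formats, so the following is only a plain-Python illustration of the underlying idea. It defines required slots with prompts, plus several reply variants so confirmations don’t sound identical every time. The booking intent and its slots are hypothetical.

```python
import random

# Hypothetical conversation design for a booking intent.
BOOKING = {
    "slots": {  # required slot -> prompt used when it is missing
        "date": "What date would you like to book?",
        "time": "What time works best for you?",
        "email": "Which email should we send the confirmation to?",
    },
    "confirmations": [  # variants keep replies from sounding scripted
        "All set! You're booked for {date} at {time}. A confirmation is on its way to {email}.",
        "Done: {date} at {time}. We've emailed the details to {email}.",
    ],
}


def next_bot_turn(filled: dict, rng: random.Random) -> str:
    """Ask for the first missing slot; when every slot is filled, confirm with a random variant."""
    for slot, prompt in BOOKING["slots"].items():
        if not filled.get(slot):
            return prompt
    return rng.choice(BOOKING["confirmations"]).format(**filled)


rng = random.Random(42)
print(next_bot_turn({"date": "May 3"}, rng))  # What time works best for you?
print(next_bot_turn({"date": "May 3", "time": "10:00", "email": "a@example.com"}, rng))
```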

Step 4: Build The Chatbot Backend

Set up your NLU pipeline to interpret user queries, detect intent, and extract entities accurately. Implement error handling for incomplete or invalid inputs. Also define output styles, such as short, quick answers or detailed, expandable responses, depending on context.
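Error handling for incomplete or invalid inputs often lives in slot validators. Each validator either accepts a cleaned value or returns a re-prompt, so the bot asks again instead of failing. The `validate_slot` function, its messages, the loose email pattern, and the ISO date requirement are assumptions for this sketch.

```python
import re
from datetime import date

EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")  # deliberately loose check


def validate_slot(slot: str, value: str):
    """Return (clean_value, None) when valid, or (None, re_prompt) when the input
    is missing or invalid."""
    value = value.strip()
    if not value:
        return None, f"I didn't catch the {slot}. Could you repeat it?"
    if slot == "email" and not EMAIL.match(value):
        return None, "That email address doesn't look right. Could you check it?"
    if slot == "date":
        try:
            parsed = date.fromisoformat(value)
        except ValueError:
            return None, "Please give the date as YYYY-MM-DD."
        if parsed < date.today():
            return None, "That date is in the past. Which upcoming date works?"
        return parsed.isoformat(), None
    return value, None


print(validate_slot("email", "a@example.com"))  # ('a@example.com', None)
print(validate_slot("date", "31/12"))           # (None, 'Please give the date as YYYY-MM-DD.')
```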

Step 5: Integrating With Website/App

This stage integrates the completed chatbot backend with user-facing platforms, providing an accessible, functional user experience across all channels, including websites and mobile apps.

For real-time communication, you can use MirrorFly, a white-label AI-powered chat SDK, to avoid building the infrastructure from scratch.

To do this, you first need a license key:

- Sign up for a MirrorFly Account

- Login to your Account

- Get the License key from the application info section

Next, initialize the MirrorFly SDK using the configuration or authentication token returned from your backend. The chatbot is then connected to MirrorFly’s real-time communication features for in-app chat, voice, and video calls.

Wire your chat UI to handle events such as new messages, users joining or leaving, notification bubbles, typing indicators, and delivery receipts. For Android, iOS, or web apps, integrate the corresponding MirrorFly SDK to embed the conversational and calling (audio and video) experience inside your applications.

For hybrid apps built with Flutter or React Native, use the platform-specific SDKs to ensure seamless real-time messaging and UI synchronization.

Next, connect lead-capture forms and webhooks to your chatbot and MirrorFly session events. This gives you:

- Instant triggers for form submission, agent handoff, or ticket creation

- Automated lead data transfer to the CRM or backend

- Unified view of user interactions across chat, call or web forms

To embed the chatbot on your website, use a lightweight JavaScript snippet that initializes the chatbot UI and connects it to your backend APIs.

Step 6: Train, Test & Reinforce

Use prompt tuning to refine responses quickly without retraining the full model, and apply supervised fine-tuning when scaling for domain-specific accuracy.

Conduct beta testing with real users to identify gaps in context handling or fallback logic. Then gather feedback and analyze logs to reinforce the model, improving precision and conversational flow over time.

Step 7: Launching & On-going Optimization

Finally, deploy the chatbot across your chosen platforms and monitor response accuracy, latency, and user satisfaction. Continuously update the knowledge base and model as your business data evolves. A/B testing different prompts or conversation flows helps optimize conversion and user retention.
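For prompt A/B tests, each user should see the same variant consistently. A common approach hashes the user ID into a bucket, as sketched here with hypothetical variant texts and an assumed experiment name. Compare conversion rates only after enough sessions for the difference to be statistically meaningful.

```python
import hashlib

# Hypothetical prompt variants under test.
PROMPT_VARIANTS = {
    "A": "You are a concise support assistant.",
    "B": "You are a friendly support assistant who always suggests a next step.",
}


def assign_variant(user_id: str, experiment: str = "prompt-test-1") -> str:
    """Deterministic 50/50 split: the same user always lands in the same variant."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def conversion_rate(events: list, variant: str) -> float:
    """events: (variant, converted) pairs collected from chat sessions."""
    outcomes = [converted for v, converted in events if v == variant]
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


user = "user-42"
print(assign_variant(user), PROMPT_VARIANTS[assign_variant(user)])
```

Including the experiment name in the hash reshuffles users for each new test, so one experiment’s split does not bias the next.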

If you prefer ready-made platforms instead of building everything from scratch, explore the best white label AI chatbot solutions available for enterprises in 2026.

What To Choose: Custom AI Chatbots Or Builder Platforms?

For most startups and enterprises that need to launch quickly, builder platforms are recommended. However, the choice between a custom AI chatbot and a builder platform depends heavily on your requirements for deployment speed, customization, budget, and available resources.

If your business prioritizes a self-hosted chatbot solution, MirrorFly AI Chatbot is the right choice. The table below compares the three options:

| Aspect | Custom AI Chatbot (In-House) | Builder Platform | MirrorFly |
|---|---|---|---|
| Cost | High investment: an in-house technical team (DevOps, ML engineers, backend developers) plus model hosting, ongoing maintenance, and security hardening | Mostly subscription-based pricing, which may rise steeply as usage grows | One-time license cost only |
| Scalability | Highly scalable, but you carry the burden of infrastructure such as servers, databases, and load balancers | The vendor handles cloud-hosted scaling; moderate scaling suits small to medium businesses but not high-volume use cases | Deploys on your own infrastructure, whether private servers or a secure cloud environment, enabling horizontal scaling for large enterprises |
| Control | Full-stack control: developers manage NLP pipelines, database schemas, API routing, and custom model integration | Limited backend visibility and less control; you rely on the Data Processing Agreement (DPA) with the third-party provider for data governance | Custom AI chatbot solution with 100% customization of AI chatbot features |
| Deployment and Launch Time | Up to 6 months | 2 weeks | 48 hours |

Checklist For Security, Privacy & Compliance Implementation

- Build privacy into the chatbot’s design and functionality from the start, following GDPR and HIPAA principles. Implement an explicit, affirmative double opt-in consent step before any data collection occurs.

- If you use a third-party LLM, a comprehensive DPA is non-negotiable. It should detail security measures and breach notification procedures, and specifically clarify whether user data will be used to train the vendor’s AI models.

- Accidental exposure of personal data is a high risk, so data masking and real-time redaction are a must.

- Use role-based access control and multi-factor authentication to secure system access.

- Build safe response policies to mitigate prompt injection and maintain factual accuracy via RAG.
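Real-time redaction can start with pattern matching, applied before messages are logged or sent to a third-party LLM. The patterns below are simplified assumptions. They will miss some formats and flag some false positives; dates, for example, can match the phone pattern. Production systems typically combine such rules with NER-based PII detection.

```python
import re

# Simplified, illustrative patterns. Order matters: cards are checked before phones.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),  # 13 to 16 digits
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace detected PII with typed placeholders before logging or calling an LLM."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("I'm jane.doe@example.com, card 4111 1111 1111 1111, call +1 (555) 123-4567."))
```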

For teams working with tight deadlines, this guide explains how to build a custom LLM chatbot in 48 hours using modern AI architectures.

Real-World Use Cases & Mini Case Studies Of AI Chatbots

1. In Fintech Banking For Customer Operations

Use case: Automated KYC verification, real-time transaction monitoring, loan eligibility checks, tailored financial advice

| Case Study | |
|---|---|
| Client | Citibank |
| Challenge | High volume of customer service queries leading to long wait times and inconsistent responses |
| Solution | Deployed chatbots across digital channels to handle inquiries in real time and provide consistent support |
| Result | Improved customer satisfaction through 24/7 fast responses, and reduced operational load on human agents |

2. In E-commerce For Conversions

Use case: 24×7 purchase support, custom product recommendations, proactive engagement for abandoned carts, voice + chat capabilities for multilingual shopping

| Case Study | |
|---|---|
| Client | Lazada Group |
| Challenge | Low customer engagement, weak sales conversion, and weak loyalty in a competitive digital space |
| Solution | AI chatbots enabled personalized engagement and behavior-driven automation |
| Result | Conversion rate grew from 2.3% to 5.7%, interaction times increased, and customer loyalty improved |

3. In Healthcare For Patient Engagement

Use case: Post-consultation follow-up, appointment booking, symptom-based triage using NLU + RAG, human-in-the-loop escalation.

| Case Study | |
|---|---|
| Client | Mayo Clinic |
| Challenge | With the rise of telemedicine, the systems faced heavy administrative burdens, limited accessibility, and low patient interaction |
| Solution | A chatbot equipped with NLP, ML, and EHR integration automated post-consultation tasks, symptom checks, and even chronic disease monitoring |
| Result | Improved patient engagement and lower operational costs |

4. In Education For Learning Support

Use case: Interactive tutoring with contextual understanding + memory persistence, student progress tracking through CRM integration/LMS sync, AI-driven course suggestion.

| Case Study | |
|---|---|
| Client | Georgia State University |
| Challenge | Students lacked personalized assistance, and teachers were overloaded with administrative and feedback tasks |
| Solution | A chatbot provided on-demand homework help, tailored learning guidance, and feedback to students |
| Result | Students received more timely support and educators saved time on routine tasks, enabling personalized learning experiences |

5. In B2B SaaS For Product Onboarding

Use case: AI onboarding assistant, client data handling with audit logs and data segregation, issue detection via conversation analytics.

| Case Study | |
|---|---|
| Client | Intercom |
| Challenge | Prospects left without engaging because the website lacked real-time interaction |
| Solution | Deployed a chatbot on the pricing page that asks about company size, industry, and needs, then routes high-intent leads to the sales team |
| Result | Increased demo bookings and improved conversion rates and ROI |

3 Mistakes To Avoid When Building A Custom AI Chatbot

1. Ignoring Cost Escalation

Using a large LLM for every query is highly cost-inefficient, because high-capability models can be up to 500x more expensive per token than smaller ones. The solution is dynamic model routing, which matches each query to a model tier based on its complexity.

Small models handle routine tasks, and advanced models handle complex reasoning. This approach maintains accuracy while reducing inference costs by up to 5x.
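Dynamic model routing can be as simple as a complexity heuristic in front of two model tiers. The model names, per-token prices, and keyword heuristic below are made up for illustration. Real routers often use a small classifier instead.

```python
import re

# Hypothetical tiers and prices (USD per 1K tokens); substitute your provider's real values.
TIERS = {
    "small": {"model": "small-model", "usd_per_1k_tokens": 0.0002},
    "large": {"model": "large-model", "usd_per_1k_tokens": 0.01},
}
COMPLEX_HINTS = {"why", "compare", "explain", "analyze", "plan"}


def pick_tier(query: str) -> str:
    """Heuristic: long or reasoning-style queries go to the large model."""
    words = re.findall(r"[a-z]+", query.lower())
    if len(words) > 40 or COMPLEX_HINTS.intersection(words):
        return "large"
    return "small"


def estimated_cost(queries: list, tokens_per_query: int = 500) -> float:
    return sum(
        TIERS[pick_tier(q)]["usd_per_1k_tokens"] * tokens_per_query / 1000 for q in queries
    )


queries = ["Where is my order?"] * 8 + [
    "Compare the Pro and Business plans for a 50-person team",
    "Explain why my invoice changed this month",
]
all_large = len(queries) * TIERS["large"]["usd_per_1k_tokens"] * 500 / 1000
print(f"routed: ${estimated_cost(queries):.4f} vs. all-large: ${all_large:.4f}")
```

With these hypothetical prices, eight simple queries go to the small tier and two complex ones go to the large tier. That costs about $0.011, compared with $0.05 for sending all ten to the large model.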

2. Not Optimizing For Context Window

If the LLM’s context window isn’t managed, the chatbot forgets earlier parts of the conversation, leading to incoherent or irrelevant responses. Every model has a fixed context window, commonly between 4K and 128K tokens.

Developers should implement progressive summarization, condensing older conversation turns into summaries, which significantly reduces token usage.
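Progressive summarization can be sketched as follows. The word-count token estimate, the `keep_recent=4` window, and the summarizer stub are assumptions. In practice, `summarize` would be an LLM call and token counts would come from the model’s tokenizer. An earlier summary is itself one of the older turns, so it gets folded into each new summary, which is what makes the approach progressive.

```python
from typing import Callable


def count_tokens(text: str) -> int:
    """Rough proxy of one token per word; use the model's tokenizer in practice."""
    return len(text.split())


def compact_history(
    turns: list,
    budget: int,
    summarize: Callable[[list], str],
    keep_recent: int = 4,
) -> list:
    """Keep the newest turns verbatim and fold everything older into one summary
    whenever the history exceeds the token budget."""
    total = sum(count_tokens(t) for t in turns)
    if total <= budget or len(turns) <= keep_recent:
        return turns
    older, recent = turns[:-keep_recent], turns[-keep_recent:]
    # A previous summary sits in `older`, so it is re-condensed with the new turns.
    return [f"Summary of earlier conversation: {summarize(older)}"] + recent


def stub_summarize(older: list) -> str:
    # Hypothetical stand-in for an LLM summarization call.
    return f"{len(older)} earlier turns condensed"


turns = [f"turn {i}: " + "word " * 20 for i in range(10)]
compacted = compact_history(turns, budget=100, summarize=stub_summarize)
print(len(compacted), compacted[0])
```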

3. Ignoring Vector Database Performance

RAG retrieves semantically similar data chunks on every query, so any retrieval delay directly slows down the entire workflow. Developers must ensure the vector database is optimized for low-latency performance.

Focus on optimized ingestion, indexing, and searching, ensuring the system handles high-dimensional vector data effectively. Leveraging GPUs for parallel vector search and indexing can further accelerate computation and reduce response times.

Why Is MirrorFly The #1 AI Chatbot Solution For Enterprises?

MirrorFly Custom AI Chatbot is a conversational API and SDK that enables businesses to integrate intelligent, human-like chatbots into any Android, iOS, or web app. The solution uses advanced NLP and NLU to understand conversational context, retain previous interactions with app users, and respond naturally like a human.

This fully customizable chatbot gives you complete data ownership, full source code access, and flexible hosting options – on-premise or in the cloud, as per your business demands.

Thinking about trying MirrorFly? Get in touch with our experts now and get started with your custom AI chatbot project.

Ready To Add a Custom AI Chatbot to Your Business Workflows?

Build a secure enterprise AI chatbot with LLM intelligence, context awareness, retrieval-augmented generation, and improved engagement.

- 24×7 automated support

- Human-like responses

- Grounded responses

Frequently Asked Questions

Can I build my own AI chatbot like ChatGPT?

Yes. Using open-source LLMs and a framework such as LangChain, you can build your own ChatGPT-like chatbot from scratch. You can also build one with no-code platforms and APIs.

What’s the best AI model for custom chatbots?

GPT-4.5, LLaMA, Mixtral, Claude, Perplexity, and Gemini are among the best models for custom AI chatbots.

How long does development take?

Developing a custom AI chatbot takes anywhere from a few weeks to over 6 months, depending on its complexity and your enterprise needs.